Student Projects

VE/VM450

Tobacco Surface Defects Detection Based on Machine Learning

Sponsor: Fen Hu, AIMS

Team Members: Shengyu Feng, Linxiao Wu, Jiajun Bao, Zhengyuan Dong, Xin Wang

Instructor: Prof. Shouhang Bo

Project Video

Project Description

Problem Statement

Tobacco on cigarette production lines often contains defects such as paper and plastic wrappings. Removing these defects is a crucial part of quality control and greatly affects customer satisfaction. While this is normally done by manual inspection, our project aims to automate the process by taking images of the tobacco and using machine learning to detect whether and where defects exist.

Fig. 1 An image of tobacco with defects

Concept Generation

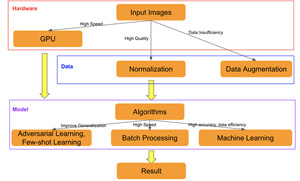

We used Morphological Analysis to generate our concepts. We first considered the requirements of our sub-problems: preprocessing, data efficiency, feature extraction, robustness, and parallelism. We categorized the concepts by requirement, ensuring at least three concepts for each, and then selected some of them to form a tree diagram. The design concepts are applied at different levels to fulfill the requirements: GPU at the hardware level; normalization and data augmentation at the data level; and adversarial learning, batch processing, and machine learning at the model level.

Fig. 2 Tree Diagram

Design Description

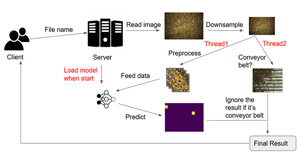

Our design has two sides: the client and the server. The server loads the model once at startup and then waits for requests from the client. Whenever the camera captures a new image from the production line, the client sends the path of the image to the server. When the server receives the request, it first loads the image and downsamples it. It then creates two threads that run simultaneously. One thread splits the image into patches, and the model predicts whether each patch contains a defect. The other thread decides whether the image shows only the conveyor belt; if so, the prediction is invalid. After both threads finish, the server sends the final result back to the client.

Fig. 3 The whole set-up system
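The request handling described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual server: the function names, the patch size, the brightness thresholds, and both placeholder classifiers are hypothetical stand-ins for the trained model, and the image is passed in directly as a nested list rather than loaded from a path.

```python
from concurrent.futures import ThreadPoolExecutor


def downsample(image, factor=2):
    """Keep every `factor`-th pixel in both directions."""
    return [row[::factor] for row in image[::factor]]


def split_into_patches(image, size):
    """Return (row, col, patch) for non-overlapping size x size patches."""
    patches = []
    for r in range(0, len(image) - size + 1, size):
        for c in range(0, len(image[0]) - size + 1, size):
            patch = [row[c:c + size] for row in image[r:r + size]]
            patches.append((r, c, patch))
    return patches


def patch_has_defect(patch, threshold=200):
    """Placeholder for the model: flag patches with a very bright pixel."""
    return any(pixel >= threshold for row in patch for pixel in row)


def detect_defects(image, size=2):
    """Return the top-left corner of every patch flagged as defective."""
    return [(r, c) for r, c, patch in split_into_patches(image, size)
            if patch_has_defect(patch)]


def is_conveyor_belt(image, dark_level=20):
    """Placeholder check: treat a uniformly dark image as empty belt."""
    return all(pixel <= dark_level for row in image for pixel in row)


def handle_request(image):
    """Downsample, run both checks in separate threads, merge the results."""
    small = downsample(image)
    with ThreadPoolExecutor(max_workers=2) as pool:
        defects_future = pool.submit(detect_defects, small)
        belt_future = pool.submit(is_conveyor_belt, small)
        defects = defects_future.result()
        on_belt = belt_future.result()
    if on_belt:
        # An image of the bare belt makes the defect prediction invalid.
        return {"valid": False, "defects": []}
    return {"valid": True, "defects": defects}
```

Note that in CPython, threads running pure-Python code like these placeholders do not execute in parallel because of the global interpreter lock; a real speed-up would depend on the checks spending their time in native code that releases the lock.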

Modeling and Analysis

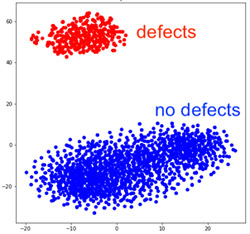

A Siamese network [1] with triplet loss is trained to map each image to a vector in feature space so that positive and negative samples are separated as far as possible. A t-SNE visualization of the feature vectors shows that positive and negative samples are well separated.

Fig. 4 t-SNE visualization of embeddings
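As a rough illustration of the triplet loss, the sketch below follows the common formulation from [1]: an anchor embedding should be closer to a "positive" (same class as the anchor) than to a "negative" (other class) by at least a margin. The squared Euclidean distance, the margin value of 0.2, and the function names are assumptions made for this example; the project's actual settings are not stated here.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge loss: zero once the negative is farther than the positive by `margin`."""
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)
```

A triplet that is already well separated contributes zero loss, so training focuses on triplets where the negative is still too close to the anchor.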

Validation

Validation Process:

– Detection Performance:

We measured the detection performance of our model by its accuracy. Given a batch of images, we counted the images that were correctly classified and divided that count by the total number of images. The higher the accuracy, the better the model. We needed to achieve an accuracy of 99.99% to meet the requirements from our sponsor.

– Test Runtime:

The runtime is measured from the moment the image is taken by the industrial camera to the moment our system sends back the result. To meet the requirements, the runtime had to be below 0.3 seconds per image.
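The two metrics above could be computed along the following lines. This is a simplified sketch with hypothetical function names: it times only a processing callable, whereas the measurement described above also includes image capture and the round trip between client and server.

```python
import time


def accuracy(predictions, labels):
    """Fraction of images whose predicted label matches the true label."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def mean_runtime_per_image(process, images):
    """Average wall-clock seconds that `process` spends per image."""
    start = time.perf_counter()
    for image in images:
        process(image)
    return (time.perf_counter() - start) / len(images)


def meets_specs(acc, runtime_s, min_accuracy=0.9999, max_runtime_s=0.3):
    """Check both sponsor requirements: accuracy target and runtime limit."""
    return acc >= min_accuracy and runtime_s < max_runtime_s
```

For scale, an accuracy of 99.99% allows at most one misclassified image in every 10,000.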

Validation Results:

The validation showed that all specifications are met.

√ Accuracy = 99.99%

√ Test Runtime ≤ 0.23 s

√ means verified; · means to be determined.

Conclusion

As a result, a triplet network combined with data augmentation methods achieves high accuracy (99.99%) and is suitable for production, since it also achieves a good runtime (0.23 s) within an acceptable budget.

Acknowledgement

Fen Hu from AIMS

Mian Li, Chengbin Ma, Chong Han, Jigang Wu, Shouhang Bo from UM-SJTU Joint Institute.

Reference

[1] Schroff F, Kalenichenko D, Philbin J (2015) FaceNet: A Unified Embedding for Face Recognition and Clustering. CVPR 2015.