VE/VM450

AR in Data Centers

Instructors: Prof. Mingjian Li

Team Members: Shuyi Zhou, Chenyun Tao, Yaxin Chen, Jinglei Xie, Liying Han

Project Description

Problem Statement

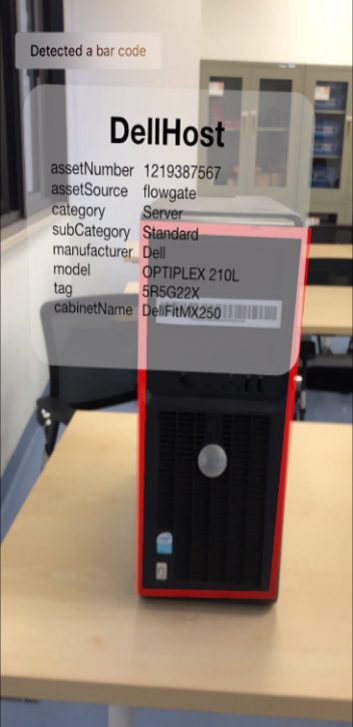

Maintenance and audits are essential in data centers, and various computer systems have been developed to support them [1]. However, most of these systems are not integrated with one another and lack user-friendly instructions. This project addresses the problem by developing an Augmented Reality (AR) app that retrieves a wide range of data center information from Flowgate, a back-end database developed by VMware, and displays it in an AR interface.

Fig. 1 Information displayed in the AR interface

Concept Generation

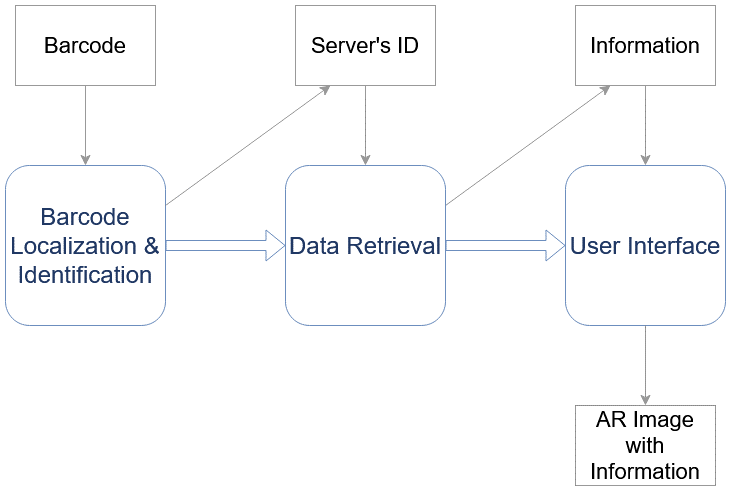

The sub-system concepts are barcode localization and identification, data retrieval from Flowgate, recognition of racks and devices and marking them in the AR interface, and display of device information in the AR interface.

Fig. 2 Concept diagram
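Marking devices on a recognized rack requires mapping a device's rack position to AR coordinates. The Python sketch below is one way to do that under stated assumptions. It assumes, hypothetically, that the Flowgate data gives each device's lowest occupied rack unit and its height in units, that U1 sits at the bottom of the rack, and that the rack-recognition step supplies the world-space height of U1's bottom edge. It uses the standard EIA-310 rack unit of 44.45 mm and ignores mounting clearances. The record and field names are illustrative, not Flowgate's schema.

```python
from dataclasses import dataclass
from typing import Tuple

RACK_UNIT_M = 0.04445  # 1U = 1.75 in = 44.45 mm (EIA-310)


@dataclass
class DevicePlacement:
    """Hypothetical device record; the field names are not Flowgate's."""
    name: str
    start_u: int   # lowest occupied rack unit, 1-based from the bottom
    height_u: int  # number of rack units the device occupies


def marker_span(device: DevicePlacement, rack_origin_y: float) -> Tuple[float, float, float]:
    """Return (bottom, top, center) heights in metres for the device's AR marker.

    rack_origin_y is the world-space height of the bottom edge of U1,
    assumed to come from the rack-recognition step.
    """
    if device.start_u < 1 or device.height_u < 1:
        raise ValueError("start_u is 1-based and height_u must be positive")
    bottom = rack_origin_y + (device.start_u - 1) * RACK_UNIT_M
    top = bottom + device.height_u * RACK_UNIT_M
    return bottom, top, (bottom + top) / 2


# Example: a 2U server in U10-U11 of a rack whose U1 starts 0.1 m above the floor.
print(marker_span(DevicePlacement("server-01", start_u=10, height_u=2), 0.1))
```

The horizontal placement of the marker would come from the recognized rack's width and orientation, which this sketch leaves out.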

Design Description

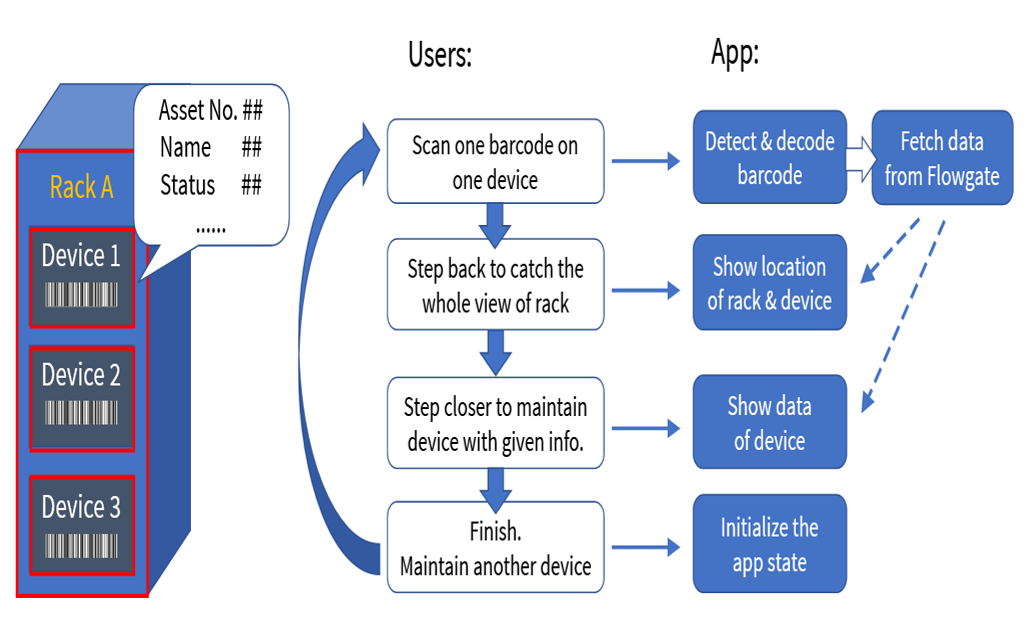

The detailed flowchart of the design is shown in Fig. 3. When users want to maintain a device, they scan its barcode, and the app detects and decodes it. The app then uses the decoded information to fetch the device's data from Flowgate. Next, the user steps back to bring the whole of rack A into view. The app recognizes the rack and its devices and marks them with red blocks in the AR interface, using the location information contained in the Flowgate data. When the user steps closer, the app shows the detailed information of the device, which the user can refer to while maintaining it. After finishing, the user can start a new session to maintain another device: the app refreshes and is ready to scan the next barcode.

Fig. 3 Detailed flowchart of the system
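The flowchart can be read as a small state machine. The Python sketch below models one maintenance session under that reading. The stage and event names (`barcode_decoded`, `data_fetched`, `device_approached`, `new_session`) are hypothetical labels for the steps described above, not identifiers from the team's Java or Swift code. The actual barcode decoding, Flowgate query and AR rendering are left out.

```python
from enum import Enum, auto
from typing import Optional


class Stage(Enum):
    SCAN = auto()       # camera is looking for a barcode
    FETCH = auto()      # decoded ID is being looked up in Flowgate
    RACK_VIEW = auto()  # user steps back; rack and devices are marked
    DETAIL = auto()     # user steps closer; device details are shown


# (current stage, event) -> next stage, following the order in Fig. 3.
TRANSITIONS = {
    (Stage.SCAN, "barcode_decoded"): Stage.FETCH,
    (Stage.FETCH, "data_fetched"): Stage.RACK_VIEW,
    (Stage.RACK_VIEW, "device_approached"): Stage.DETAIL,
    (Stage.DETAIL, "new_session"): Stage.SCAN,
}


class MaintenanceSession:
    """One maintenance session; event names are hypothetical."""

    def __init__(self) -> None:
        self.stage = Stage.SCAN
        self.asset_id: Optional[str] = None

    def handle(self, event: str, asset_id: Optional[str] = None) -> Stage:
        key = (self.stage, event)
        if key not in TRANSITIONS:
            raise ValueError(f"event {event!r} is not valid in stage {self.stage.name}")
        if event == "barcode_decoded":
            if not asset_id:
                raise ValueError("barcode_decoded needs the decoded asset ID")
            self.asset_id = asset_id  # used for the Flowgate lookup
        elif event == "new_session":
            self.asset_id = None  # refresh so the next barcode starts clean
        self.stage = TRANSITIONS[key]
        return self.stage


session = MaintenanceSession()
for event, arg in [("barcode_decoded", "asset-123"), ("data_fetched", None),
                   ("device_approached", None), ("new_session", None)]:
    print(event, "->", session.handle(event, arg).name)
```

Rejecting out-of-order events makes it explicit that, for example, device details are never shown before the Flowgate data has arrived.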

Manufacturing

Manufacturing is divided into three major parts: the Flowgate environment, the Android app, and the iOS app. The back-end server, Flowgate, is developed by VMware; we install and configure it. For the two front-end platforms, we use Java for the Android app and Swift for the iOS app. To build the AR interface, we use Google's Sceneform 1.17.1 SDK, which is based on ARCore, for Android, and Apple's ARKit for iOS. In addition, the Android app uses ML Kit to detect and decode barcodes. The final apps run on Android and iOS mobile phones.

Validation

Validation Process:

For barcode localization and identification time and AR image generation time, we add timers to our code and read the results from the log.
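The timers themselves live in the Android and iOS code, which this page does not show. As a language-neutral illustration, the Python sketch below wraps a stage in a timer, logs each duration, and checks the slowest run against the limits from the specification list (0.55 s and 0.1 s). The stage names are hypothetical. Judging by the worst case rather than the mean is also an assumption, since the page does not say which statistic was used.

```python
import logging
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List

logging.basicConfig(level=logging.INFO, format="%(name)s: %(message)s")
log = logging.getLogger("validation")

# Upper bounds (seconds) from the specification list.
LIMITS_S = {
    "barcode_localization_identification": 0.55,
    "ar_image_generation": 0.1,
}


@contextmanager
def timed(stage: str, results: Dict[str, List[float]]) -> Iterator[None]:
    """Time the enclosed block, record the duration, and log it."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        results.setdefault(stage, []).append(elapsed)
        log.info("%s took %.4f s", stage, elapsed)


def check_limits(results: Dict[str, List[float]]) -> Dict[str, bool]:
    """Report, per stage, whether the slowest recorded run is under its limit."""
    return {stage: max(times) < LIMITS_S[stage] for stage, times in results.items()}


results: Dict[str, List[float]] = {}
with timed("ar_image_generation", results):
    time.sleep(0.01)  # stand-in for rendering the AR overlay
print(check_limits(results))
```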

For barcode localization correctness and data retrieval accuracy, we compare the obtained results with the expected ones.
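Comparing obtained results with expected ones reduces to a match rate. The Python sketch below assumes each test case can be keyed by an identifier, for example an image name for barcode tests or a device ID plus field name for Flowgate lookups. It counts exact matches and compares the rate with the thresholds from the specification list (above 90% and above 99%). The key format and the exact-match rule are assumptions; barcode localization might instead be judged by bounding-box overlap.

```python
from typing import Hashable, Mapping

# Lower bounds from the specification list (strict inequality).
SPEC_MIN = {
    "barcode_localization_correctness": 0.90,
    "data_retrieval_accuracy": 0.99,
}


def accuracy(obtained: Mapping[Hashable, object], expected: Mapping[Hashable, object]) -> float:
    """Fraction of expected entries whose obtained value matches exactly.

    Keys are test-case identifiers; a key missing from `obtained`
    counts as a mismatch.
    """
    if not expected:
        raise ValueError("expected must contain at least one entry")
    hits = sum(1 for key, value in expected.items()
               if key in obtained and obtained[key] == value)
    return hits / len(expected)


def meets_spec(metric: str, value: float) -> bool:
    """True if the measured rate is strictly above the specified minimum."""
    return value > SPEC_MIN[metric]
```

For example, if three of four expected fields come back correctly, `accuracy` returns 0.75, which would fail both thresholds.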

Other specifications can be verified with simple experiments.
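The page does not describe how frame rate was measured. One simple experiment is to record per-frame timestamps and derive the rate from them; ARCore's `Frame.getTimestamp()` (in nanoseconds) and ARKit's `ARFrame.timestamp` (in seconds) both provide such values. The Python sketch below assumes the timestamps have already been converted to seconds and reports both the mean rate and the rate implied by the longest gap between consecutive frames.

```python
from typing import List, Sequence


def _gaps(timestamps_s: Sequence[float]) -> List[float]:
    """Intervals between consecutive frames; all must be positive."""
    if len(timestamps_s) < 2:
        raise ValueError("need at least two frames")
    gaps = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    if min(gaps) <= 0:
        raise ValueError("timestamps must be strictly increasing")
    return gaps


def average_fps(timestamps_s: Sequence[float]) -> float:
    """Mean frame rate over the whole recording."""
    gaps = _gaps(timestamps_s)
    return len(gaps) / sum(gaps)


def worst_fps(timestamps_s: Sequence[float]) -> float:
    """Frame rate implied by the longest gap between consecutive frames."""
    return 1.0 / max(_gaps(timestamps_s))


frames = [0.0, 0.05, 0.10, 0.15, 0.20]  # synthetic timestamps, 50 ms apart
print(average_fps(frames), worst_fps(frames))
```

Reporting the worst gap alongside the mean is useful because a single long stall barely moves the average but is what the user notices.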

Validation Results:

The validation results show that most specifications are met:

√ Barcode localization correctness > 90%

√ AR image generation time < 0.1s

√ Data retrieval accuracy > 99%

√ Frame rate > 15fps

· Temperature < 40˚C

· Barcode localization and identification time < 0.55s

√ means verified; · means to be determined.

Conclusion

AR is useful for data center maintenance and audits. Our project produces an app that displays data center information in AR on portable devices while ensuring short reaction time, correct information, and a comfortable display.

Acknowledgement

Sponsors: Gavin Lu and Yixing Jia from VMware

Mingjian Li, Jigang Wu and Chengbin Ma from UM-SJTU Joint Institute

Xiang Hao, Hongfeng Xu, Hanchen Cui and Zhikang Li from UM-SJTU Joint Institute

UM-SJTU JOINT INSTITUTE